Every GPU in the recently launched RTX 3080 and 3090 graphics cards contains second-generation ray tracing cores and third-generation Tensor Cores for deep learning workloads. Alongside them are new streaming multiprocessors (SMs) designed to deliver smoother gameplay and higher graphics quality. For streamers and content creators, the RTX 30 series also offers dedicated features developed by Nvidia, which will be discussed in detail in this article.

The RTX 30 series also boasts several 'firsts': it is the first commercial graphics card line equipped with GDDR6X VRAM and the first to support HDMI 2.1 signal output, enabling gaming at 4K 120Hz or 8K 60Hz. The Founders Edition of the RTX 30 series is also the first generation of cards equipped with a dual axial flow cooling mechanism, providing efficient cooling for the already powerful GPU on the board.

GA102 and GA104

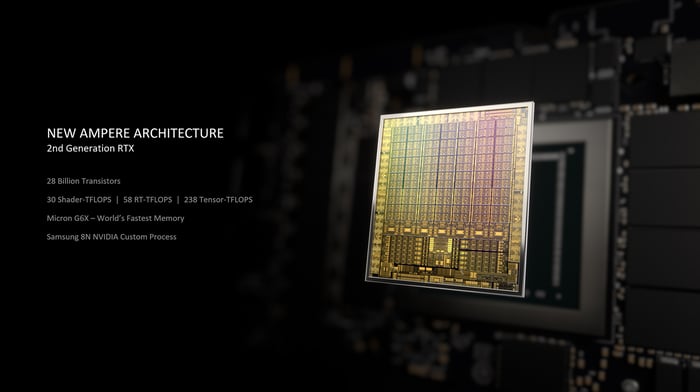

The transistor density of the Ampere architecture is nearly double that of the Turing architecture (TU102 GPU): GA102 packs 44.6 million transistors per square millimeter of silicon, compared to 24.67 million transistors per square millimeter for TU102. This achievement is largely attributed to Samsung's 8nm process.

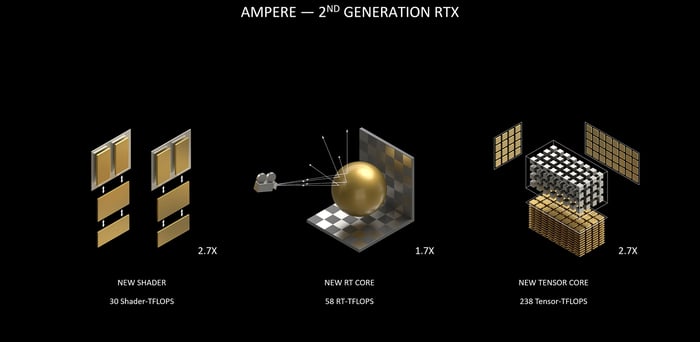

Each of the SMs mentioned above is equipped with 4 Tensor cores and 1 ray tracing (RT) core. Tensor cores handle deep learning tasks, one of the most important being DLSS in supported games. Instead of conventional anti-aliasing, DLSS uses deep learning algorithms to process low-resolution image samples, upscaling the image to a higher resolution while maintaining graphics quality. As for ray tracing, titles like Control, Battlefield V, and Metro Exodus have all showcased the potential of this rendering technology in recent years. Rather than leaving ray tracing to general-purpose shader hardware, the RTX 30 series has dedicated RT cores to process lighting and shadows, creating the most realistic virtual worlds.

On the RTX 3080, the diagram shows a 5MB L2 cache, while on the RTX 3090 it is 6MB, shared across all GPC clusters. The GA102 on the RTX 3080 is equipped with ten 32-bit memory controllers for a 320-bit bus, whereas on the RTX 3090 it has twelve 32-bit memory controllers for a 384-bit bus.

The same applies to the GA104 GPU on the RTX 3070, set to launch on October 29th. This GPU features 46 SMs, for a total of 5888 CUDA cores, 184 Tensor cores, and 46 RT cores. The GA104 has a 4MB L2 cache shared across all GPC clusters, along with eight 32-bit memory controllers, resulting in a 256-bit memory bus.
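
The chip-level totals quoted above follow from simple per-SM multiplication. The sketch below is only an illustration: the function name `gpu_totals` and the constant names are hypothetical, and the figure of 128 FP32 CUDA cores per SM is taken from the four 32-lane SM partitions described in the FP32 section of this article.

```python
# Per-SM resource counts as described in this article (assumed constant per SM).
FP32_CORES_PER_SM = 128          # 4 partitions x 32 FP32 lanes
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1
BITS_PER_MEMORY_CONTROLLER = 32  # each memory controller is 32 bits wide


def gpu_totals(sm_count: int, memory_controllers: int) -> dict:
    """Derive chip-level totals from the SM and memory-controller counts."""
    return {
        "cuda_cores": sm_count * FP32_CORES_PER_SM,
        "tensor_cores": sm_count * TENSOR_CORES_PER_SM,
        "rt_cores": sm_count * RT_CORES_PER_SM,
        "bus_width_bits": memory_controllers * BITS_PER_MEMORY_CONTROLLER,
    }


# RTX 3070 (GA104): 46 SMs and 8 memory controllers, as stated above.
rtx_3070 = gpu_totals(sm_count=46, memory_controllers=8)
print(rtx_3070)
# {'cuda_cores': 5888, 'tensor_cores': 184, 'rt_cores': 46, 'bus_width_bits': 256}
```

Passing 10 or 12 memory controllers gives the 320-bit and 384-bit buses quoted for the RTX 3080 and RTX 3090.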

Ampere SM: FP32 processing performance doubles

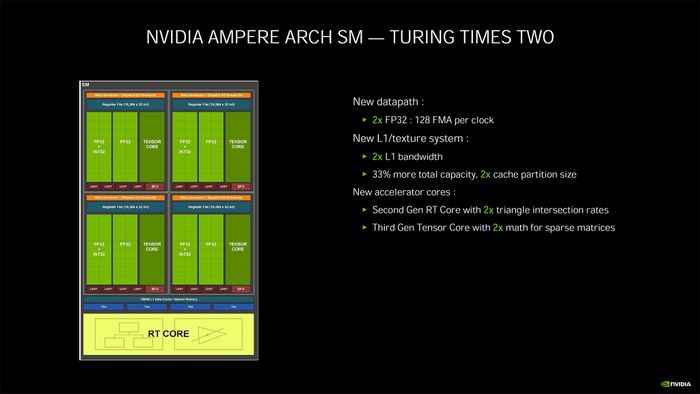

Perhaps no one explains this more clearly than Tony Tamasi, Nvidia's Vice President of Technology: “One of the goals in designing the SMs of the Ampere architecture was to achieve double the FP32 processing speed of the SMs on Turing GPUs. To accomplish this, the Streaming Multiprocessors of the Ampere GPU are designed with new data paths to handle FP32 and INT32. One data path in each partition consists of 16 FP32 CUDA cores, capable of executing 16 FP32 operations per clock. Another data path consists of 16 FP32 CUDA cores and 16 INT32 cores. The result of this new design is that each SM partition on the Ampere GPU can process either 32 FP32 operations or 16 FP32 + 16 INT32 operations per clock. Four SM partitions combined can process 128 FP32 operations per clock, or 64 FP32 + 64 INT32 operations, doubling the processing performance over Turing GPU SMs.”

Doubling the FP32 processing performance increases the efficiency of graphics tasks and other common algorithms. Shader workloads in games are typically made up of FP32 operations such as FFMA, FADD, or FMUL, combined with simpler integer operations for data addressing, floating-point comparison, or computing the minimum/maximum of results. One of the most evident advantages of doubling the FP32 processing performance is faster processing of ray tracing denoising shaders.
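
The per-clock figures in the quote above can be reproduced with a small model. This is a simplified sketch, not Nvidia's scheduler: the function `sm_ops_per_clock` and its constants are hypothetical names, and it assumes all four partitions use their shared data path the same way in a given clock.

```python
PARTITIONS_PER_SM = 4
FP32_ONLY_LANES = 16  # first data path: FP32 only
SHARED_LANES = 16     # second data path: runs either FP32 or INT32 in a clock


def sm_ops_per_clock(shared_path_runs_int32: bool) -> tuple:
    """Return (fp32_ops, int32_ops) that one SM can issue per clock."""
    if shared_path_runs_int32:
        fp32_per_partition = FP32_ONLY_LANES
        int32_per_partition = SHARED_LANES
    else:
        fp32_per_partition = FP32_ONLY_LANES + SHARED_LANES
        int32_per_partition = 0
    return (fp32_per_partition * PARTITIONS_PER_SM,
            int32_per_partition * PARTITIONS_PER_SM)


print(sm_ops_per_clock(shared_path_runs_int32=False))  # (128, 0)
print(sm_ops_per_clock(shared_path_runs_int32=True))   # (64, 64)
```

The doubled FP32 rate only applies to clocks in which the shared path has no integer work, which is why shader code that mixes in integer operations lands somewhere between the two cases.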

Doubling the computing performance means doubling the data paths that feed it, which is why the SMs on the Ampere GPU also double the memory and L1 cache performance for each SM. The total L1 cache bandwidth on the RTX 3080 is 219 GB/s compared to 116 GB/s on the RTX 2080 Super.

GDDR6X: Weaponizing Data Bandwidth to Nearly 1TB/s

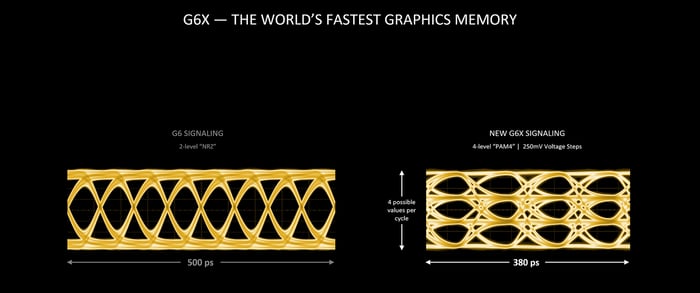

If the Ampere GPU is a beast, the Micron-made GDDR6X VRAM on the RTX 3080 and 3090 also helps make gaming performance faster and stronger than on the previous generation of graphics cards. First, the new VRAM technology allows twice the amount of data to be transferred in and out per cycle. On the RTX 3090, GDDR6X gives the card nearly 1TB/s of memory bandwidth (about 936 GB/s with a 384-bit bus at 19.5 Gbps), which helps it handle the high-resolution textures and character models needed for sharp 4K or 8K gaming.

Micron's GDDR6X itself reaches theoretical speeds of up to 21 Gbps. On the RTX 3090 the VRAM runs at 19.5 Gbps, which is still enough to impress gamers diving into the stunning virtual worlds of their favorite games.
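
The "nearly 1TB/s" figure can be checked from the bus width and per-pin data rate given in this article. This is a minimal sketch: the function name `memory_bandwidth_gb_s` is hypothetical, and the formula gives the theoretical peak only, not the bandwidth achieved in games.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each of the bus_width_bits pins carries data_rate_gbps gigabits per
    second; dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# RTX 3090: 384-bit bus with GDDR6X running at 19.5 Gbps per pin.
print(memory_bandwidth_gb_s(384, 19.5))  # 936.0

# The same bus at Micron's theoretical 21 Gbps would just pass 1 TB/s.
print(memory_bandwidth_gb_s(384, 21.0))  # 1008.0
```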

DLSS 2.0

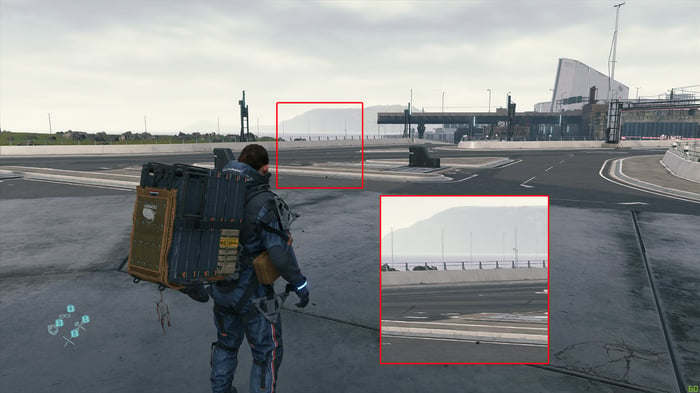

Thanks to Deep Learning Super Sampling (DLSS), Nvidia can harness the power of AI to assist the rendering process, producing what is known as super resolution. Only a fraction of the pixels need to be rendered; AI then reconstructs the image at higher sharpness and resolution without requiring as much hardware power as before. Nvidia states that on GPUs equipped with Tensor cores, which are designed to run AI tasks, DLSS boosts frame rates while still delivering beautiful, crisp images in games. This allows users to push ray tracing settings to the highest possible levels while also increasing the resolution. Curious how good DLSS is? Take a look at these two screenshots I captured while reviewing the PC version of Death Stranding. The left image is with DLSS, and the right one is with TAA + FidelityFX Sharpening:

DLSS 2.0 delivers image quality comparable to native resolution while rendering only 1/4 or 1/2 of the pixels. Nvidia says the technology uses temporal feedback techniques to enhance image detail and sharpness while improving frame-to-frame stability. DLSS 2.0 also uses the Tensor cores more efficiently, running twice as fast as the original DLSS, which boosts frame rates and removes the previous limitations on supported GPUs, game settings, and resolutions.
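
To get a feel for what "1/4 or 1/2 of the pixels" means, the sketch below converts a pixel fraction into an internal render resolution. It is only an illustration: the function name `internal_resolution` is hypothetical, and it assumes both axes are scaled by the same factor, whereas the actual render resolutions are chosen by Nvidia and each game.

```python
import math


def internal_resolution(out_width: int, out_height: int,
                        pixel_fraction: float) -> tuple:
    """Estimate the render resolution for a given fraction of output pixels.

    Scaling each axis by sqrt(pixel_fraction) scales the total pixel
    count by pixel_fraction.
    """
    scale = math.sqrt(pixel_fraction)
    return round(out_width * scale), round(out_height * scale)


# Rendering 1/4 of the pixels for a 4K (3840x2160) output.
print(internal_resolution(3840, 2160, 0.25))  # (1920, 1080)

# Rendering 1/2 of the pixels for the same output.
print(internal_resolution(3840, 2160, 0.5))   # (2715, 1527)
```

In other words, at 1/4 of the pixels the GPU shades a 1080p image and the Tensor cores reconstruct the 4K frame from it.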

HDMI 2.1 and AV1 Codec

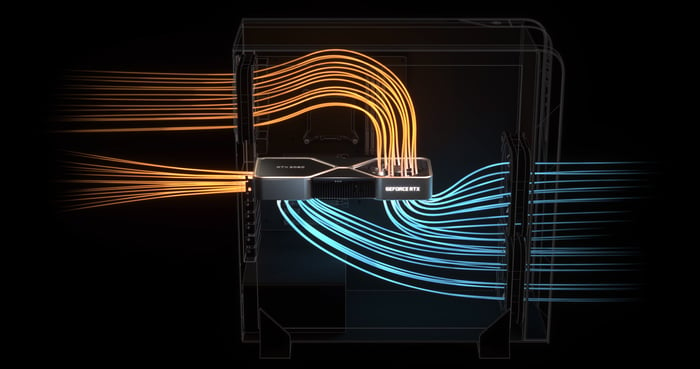

The rapid evolution of the global TV industry has outpaced the development of HDMI connection standards. While 4K and 8K displays are becoming increasingly common, older cables and standards like HDMI 2.0 struggle to meet the demand. With HDMI 2.0, the image output to the screen is limited to 4K HDR 98Hz. That is why the RTX 30 series supports the HDMI 2.1 standard, with a bandwidth of 48 Gbps, enabling 8K output over a single HDMI cable and allowing gaming at 4K 120Hz or 8K 60Hz. Additionally, the RTX 30 series supports the AV1 codec through hardware decoding. AV1 needs up to 50% less bandwidth than the H.264 standard, which makes 4K game streaming, quite rare until now, far more practical.

Dual axial cooling

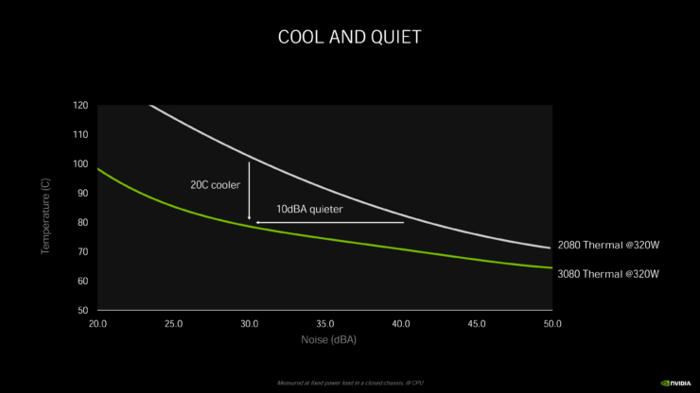

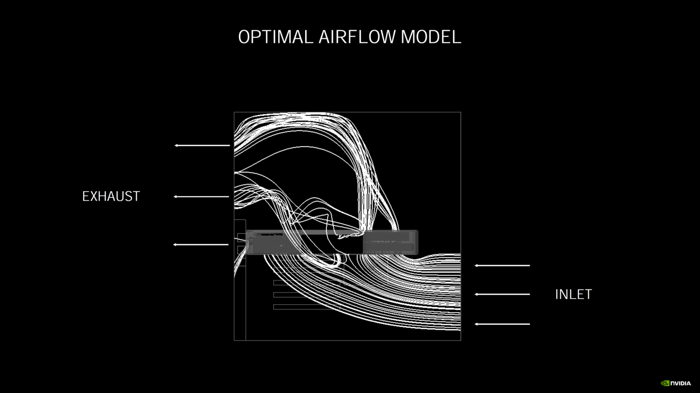

What truly impresses me is the heatsink system and its pair of heat spreaders, far more complex than those on the RTX 2080 Ti, that Nvidia has fitted to the RTX 3080 and 3090. Nvidia has opted for a dual-fan push-pull system along with a nano-carbon-coated aluminum alloy heatsink. The bottom fan draws in fresh air, while the top fan blows air out to cool the GPU. Nvidia claims that this system increases cooling airflow by 55% and keeps the GPU much cooler than on the RTX 2080 Ti. Additionally, Nvidia says the triple-slot design helps the RTX 3090 run 10 times quieter than the previous-generation Titan RTX.