The workers who filtered harmful content out of ChatGPT received mental health counseling, but they say the therapy sessions did not genuinely help.

Officially released late last year, ChatGPT immediately became a sensation, praised as one of the most impressive technological advances of the year.

This artificial intelligence (AI) chatbot can generate text on almost any topic, from crafting poetry in Shakespearean sonnet form to explaining complex mathematical theorems in language understandable to a 5-year-old.

Within a week, ChatGPT had over a million users. It went on to reach 10 million users in just 40 days, far outpacing the early growth of social media platforms such as Instagram, which took 355 days to reach 10 million users.

OpenAI, the AI research company behind ChatGPT, is attracting significant investment, including $10 billion from Microsoft. It would not be surprising if OpenAI soon ranked among the world's most valuable AI companies.

Efforts to curb harmful content

ChatGPT works by generating text based on patterns learned from a vast amount of text collected from the internet, rather than by searching the web in real time. Simply type a question, and the tool answers within seconds.

ChatGPT's answers can be clever and adaptive, changing depending on how the user phrases a prompt. However, because the technology is still at an early stage of development, its answers can be controversial or wrong even when they sound convincing.

ChatGPT's predecessor, GPT-3, could also synthesize information impressively. However, because it was trained on an enormous amount of internet data, its operators could not fully control the inaccurate and harmful content it reproduced. The product was hard to sell because it tended to produce violent, sexist, and racist remarks. By some estimates, even a team of hundreds of people would need decades to sift through the massive dataset by hand.

In response, ChatGPT emerged as a more advanced successor to GPT-3, better able to filter out harmful information. Still, it is not entirely "clean": content involving racial discrimination, misogyny, hostility, violence, and crime still surfaces. OpenAI CEO Sam Altman acknowledges that, like any other AI, ChatGPT is still finding its place in society, remains controversial, and is not immune to misuse.

To label harmful content, OpenAI worked with Sama, a San Francisco-based company that hires content moderators in Kenya, Uganda, and India. Sama promotes itself as an ethical AI company that has helped more than 50,000 people escape poverty. Yet TIME magazine, after reviewing internal documents such as pay slips and conducting anonymous interviews with employees, concluded that its data labelers took home only about $1.32 to $2 per hour, depending on seniority and performance, with the highest rate reported at $3.74 per hour after taxes.

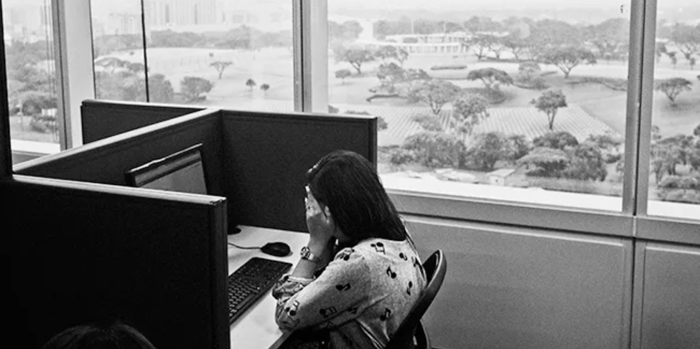

Although they are exposed every day to harmful content about violence, sex, and self-harm, these workers earn meager wages. The story of ChatGPT's anonymous workforce sheds light on a less-told side of the AI industry.

According to the Partnership on AI, a coalition of AI organizations that includes OpenAI, the human labor behind AI is being obscured, partly because of a push to emphasize and celebrate the technology's independence.

The Sama and OpenAI Agreement

As the economy slows amid fears of a recession, investors have poured billions of dollars into "generative AI," betting that computer-generated text, images, video, and audio will reshape business across many industries and in fields such as the creative arts, law, and computer programming.

Behind the glitter and promise, a group of workers in the Global South labors to build a billion-dollar empire while earning meager wages.

Under the contract between OpenAI and Sama, OpenAI paid Sama $12.50 per hour, six to nine times what Sama's workers actually took home. A Sama spokesperson explained the gap: "The $12.50 rate for the project covers all costs, such as infrastructure expenses, and salaries and benefits for associates as well as analysts." Because the rate is divided this way, the workers themselves receive far less.

About thirty employees were split into three teams, each focused on labeling a different type of content. The employees interviewed said they had to read and label 150 to 250 passages of text during each nine-hour shift. All of them said the work left them mentally scarred. Although they had access to counseling, they did not find the therapy sessions genuinely helpful.

A Sama employee tasked with reading and labeling text for OpenAI told TIME magazine that he suffered recurring disturbing visions after reading graphic descriptions of deviant sexual acts. "You read such content throughout the week, and by the end of the week, your mind has been worn down," he said.

Because of the heavy workload and the risk of serious psychological harm to its employees, Sama ended its work with OpenAI in February 2022, eight months earlier than planned.

Ending the partnership meant that Sama's employees no longer had to deal with harmful text and images, but it also hurt their livelihoods: most were moved to other, lower-paying work, and a few lost their jobs. Sama itself had to weather a public relations crisis.

Besides OpenAI, Sama has also worked with Facebook. Earlier this year, Sama decided to cancel all of its content moderation work. The company will not renew its $3.9 million content moderation contract with Facebook, resulting in the loss of around 200 jobs in Nairobi, Kenya.

Andrew Strait, an AI ethics researcher, noted: "ChatGPT and similar models deliver impressive but not magical results. These tools still rely on massive supply chains of human labor and data collected from the internet, much of which is used without consent. These are fundamental, serious issues that OpenAI has yet to address."