HDR, or "High Dynamic Range," significantly enhances video quality by boosting the contrast between bright and dark areas, as well as broadening the color range and bit depth. HDR delivers a more vibrant, lifelike image. But the question remains: is it beneficial for gaming? This article from Mytour provides all the answers.

Is HDR a good fit for gaming?

HDR can be a great choice for gamers who appreciate stunning, cinematic visuals. However, if you mainly play competitive games, play titles that lack HDR support, or don't have a monitor that fully supports HDR, it might not be worth the investment.


Is HDR suitable for gaming?

- High-quality visuals: If you're into AAA titles with cinematic-level graphics, HDR could be a great choice. It brings stunning visuals to life. However, if you prefer competitive gaming, HDR might not be as essential.

- Game compatibility: Whether or not HDR is worthwhile depends on the games you enjoy. Many older titles (pre-2017) don’t support HDR, and retro-style games like Undertale and Minecraft don’t require HDR, as they focus on a different aesthetic.

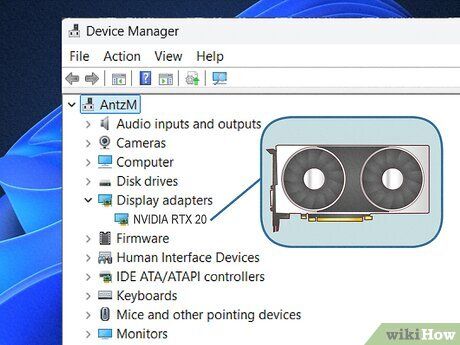

- Required equipment: To fully enjoy HDR, you'll need either a PC with an HDR-capable graphics card or a console that supports HDR. You'll also need an HDR-compatible monitor or TV. Not all HDR displays are created equal: budget monitors might not deliver the same image quality as premium HDR models with features such as higher peak brightness or local dimming.

What exactly is HDR?

- HDR10: HDR10 is one of the main standards for HDR content. It's widely supported across HDR displays and streaming services. An open standard, it uses "static metadata," which means a single set of brightness and color information applies to an entire piece of content, such as a whole movie or game, rather than changing from scene to scene.

- HDR10+: HDR10+ was developed by Samsung and is also an open standard. Unlike HDR10, it uses "dynamic metadata," adjusting the brightness and color profile for each scene, allowing for more nuanced detail in both bright and dark scenes.

- Dolby Vision: Dolby Vision, created by Dolby Laboratories, also uses dynamic metadata to adjust brightness and color on a per-scene or even per-frame basis. It can adapt to your display's capabilities in real time. Unlike HDR10 and HDR10+, Dolby Vision isn’t an open standard: content creators and device manufacturers need a license to use it.

- HLG: Hybrid Log-Gamma (HLG) was developed by the BBC and Japan’s NHK. It doesn’t use metadata; instead, it extends the standard gamma curve used by SDR TVs, which makes it backward-compatible with SDR displays. While it’s an open standard, it’s not as widely used and doesn’t offer the same depth in dark scenes as other HDR formats.
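HLG's "extended gamma curve" is defined in the ITU-R BT.2100 standard as a transfer function with two parts: a square-root segment for darker values, which behaves much like the gamma curve an SDR TV expects, and a logarithmic segment that squeezes bright highlights into the top of the signal. The Python sketch below implements only that camera-side encoding curve (the OETF). The constants come from BT.2100; the function name `hlg_oetf` is our own, and display-side decoding is deliberately left out.

```python
import math

# HLG OETF constants from ITU-R BT.2100. b and c are derived from a so the
# square-root and logarithmic segments meet smoothly at E = 1/12.
A = 0.17883277
B = 1 - 4 * A                  # about 0.28466892
C = 0.5 - A * math.log(4 * A)  # about 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalized scene light e in [0, 1] to an HLG signal value in [0, 1]."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("scene light must be normalized to [0, 1]")
    if e <= 1 / 12:
        # Darker range: a square-root (gamma-like) curve, close to what SDR TVs expect.
        return math.sqrt(3 * e)
    # Brighter range: a logarithmic curve that compresses highlights.
    return A * math.log(12 * e - B) + C


if __name__ == "__main__":
    for e in (0.0, 0.01, 1 / 12, 0.25, 0.5, 1.0):
        print(f"scene light {e:.4f} -> signal {hlg_oetf(e):.4f}")
```

The first twelfth of the scene-light range uses half of the signal range. That is why a plain SDR set, which only understands the gamma-like part, still shows a watchable picture.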

What equipment do you need for HDR gaming?

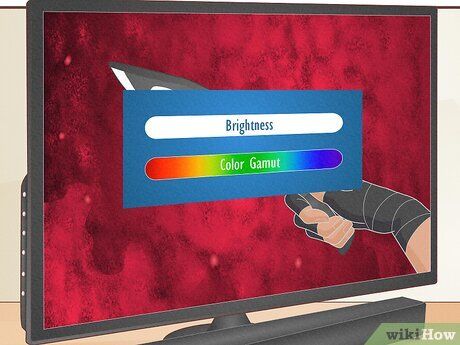

- Support for multiple HDR formats: A good HDR monitor or TV should support at least HDR10 and Dolby Vision. Bonus points for HDR10+ and HLG support, but note that supporting a format doesn’t guarantee that the monitor can take full advantage of it.

- Peak brightness: A monitor's brightness is measured in nits or cd/m² (one nit equals one candela per square meter, so the two units are interchangeable). A quality HDR display should reach a peak brightness of 600 cd/m² or higher.

- Black levels: Black levels refer to how dark a screen can get; lower values mean deeper blacks. For instance, Dolby recommends black levels around 0.005 nits or less, while Intel suggests a minimum of 0.44 nits per millimeter.

- Color depth: Standard monitors offer 8-bit color depth per channel (16.7 million colors), while true 10-bit monitors can display up to 1.07 billion colors. Many monitors marketed as 10-bit are actually 8-bit panels that use dithering (often labeled "8-bit + FRC") to approximate 10-bit. They show smoother gradients than plain 8-bit monitors but are not true 10-bit.

- Color space: Color space refers to the range of colors a display can show. While standard monitors use sRGB, HDR monitors typically use the broader DCI-P3 color space. A top-tier HDR display should cover at least 90% of the DCI-P3 space.

- OLED vs. LED with local dimming: LED-backlit LCD monitors are common, but OLED screens offer superior contrast because each pixel emits its own light and can switch off completely, so no backlight is needed. On LED-backlit monitors, local dimming zones improve contrast in dark scenes by dimming or turning off specific areas of the backlight.

- Refresh rate: Refresh rate affects how smooth motion looks in games. Most console games target 60 Hz, although current consoles such as the PlayStation 5 and Xbox Series X can output 120 Hz in supported games. PC gamers often aim for 144 Hz or higher for smoother motion. However, high-refresh HDR displays are expensive, often costing two to three times more than their standard counterparts.
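Several of the figures in this list come from simple arithmetic. The Python sketch below reproduces them: color counts for 8-bit and 10-bit panels, the contrast ratio you would get by pairing a 600-nit peak with Dolby's 0.005-nit black level, and how long each frame stays on screen at 60 Hz versus 144 Hz. The helper names are our own, and the 600/0.005 pairing is an illustrative combination, not a published spec.

```python
def colors_for_bit_depth(bits_per_channel: int) -> int:
    """Distinct colors an RGB panel can address with the given bits per channel."""
    # Each of the three channels (R, G, B) has 2**bits levels; the combinations multiply.
    return (2 ** bits_per_channel) ** 3


def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Contrast ratio: peak white luminance divided by black luminance."""
    if black_nits <= 0:
        # A fully switched-off OLED pixel makes the ratio effectively infinite.
        raise ValueError("black level must be positive")
    return peak_nits / black_nits


def frame_time_ms(refresh_hz: float) -> float:
    """How long each frame stays on screen at a given refresh rate, in milliseconds."""
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    print(f"8-bit:  {colors_for_bit_depth(8):,} colors")   # 16,777,216
    print(f"10-bit: {colors_for_bit_depth(10):,} colors")  # 1,073,741,824
    print(f"600 nits / 0.005 nits -> {contrast_ratio(600, 0.005):,.0f}:1")
    for hz in (60, 144):
        print(f"{hz} Hz -> {frame_time_ms(hz):.1f} ms per frame")
```

Adding two bits per channel multiplies the color count by 2³ × 2³ = 64, which is where the jump from about 16.7 million to about 1.07 billion comes from.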