Most types of computer memory are designed for temporary data storage. Explore images showcasing the inner workings of computer memory. Brandon Goldman / Getty Images

It's fascinating to think about the variety of electronic memory types we encounter every day. Many of them have become key terms in our everyday language:

- RAM

- ROM

- Cache

- Dynamic RAM

- Static RAM

- Flash memory

- Memory Sticks

- Virtual memory

- Video memory

- BIOS

You’re likely aware that the computer you use has memory, but did you know that many of the electronic devices you interact with daily also contain some form of memory? Here are just a few examples of items with memory:

- Cell phones

- PDAs

- Game consoles

- Car radios

- VCRs

- TVs

In this article, you’ll explore why there are so many types of memory and what these terms mean. Let’s begin with the basics: What exactly is the role of computer memory?

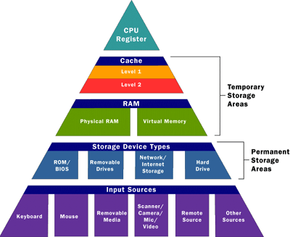

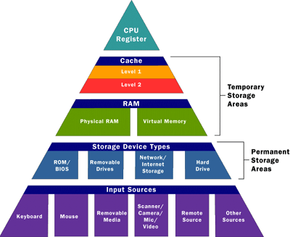

Computer Memory Basics

Although memory refers to any form of electronic storage, it is most commonly used to describe fast, temporary storage. Without memory, your computer's CPU would need to repeatedly access the hard drive for each piece of data, slowing down its performance. By storing information in memory, the CPU can retrieve it much faster. Most types of memory are designed for temporary data storage.

The CPU retrieves data from memory in a well-defined order. Whether the data comes from permanent storage (the hard drive) or input sources (like the keyboard), it is typically loaded into random access memory (RAM) first. The CPU may then store data it frequently uses in a cache and retain important instructions in a register. We'll delve deeper into caches and registers later.

All the components in your computer, including the CPU, hard drive, and operating system, function together like a team, with memory playing a vital role. From the moment your computer powers on until it shuts down, memory is continuously in use by the CPU. Let’s walk through a typical process:

- You power on the computer.

- The computer loads data from read-only memory (ROM) and runs a power-on self-test (POST) to verify the major components are working correctly. During this test, the memory controller quickly checks all memory addresses with a read/write operation to ensure the memory chips are error-free. Read/write means data is written to a bit and then read from that bit.

- The computer loads the basic input/output system (BIOS) from ROM. BIOS provides fundamental details about storage devices, boot sequences, security, Plug and Play (auto device recognition), and a few other elements.

- The computer loads the operating system (OS) from the hard drive into RAM. Critical parts of the operating system remain in RAM while the computer is on, enabling the CPU to access it instantly, which boosts performance and system functionality.

- When you launch an application, it is loaded into RAM. To save on RAM usage, many applications load only the core components initially and pull in additional parts as needed.

- Once an application is running, any files opened in it are also loaded into RAM.

- When you save a file and close the application, the file is written to the selected storage device, and both it and the application are removed from RAM.

In the process above, each time data is loaded or accessed, it is placed into RAM. This means it is stored temporarily in the computer’s memory so the CPU can retrieve it more quickly. The CPU continuously requests, processes, and writes data back to RAM in a cyclical manner. In most computers, this data exchange between the CPU and RAM occurs millions of times each second. When an application is closed, it, along with any open files, is typically purged (deleted) from RAM to free up space for new data. If unsaved changes are not written to a permanent storage device before the purge, they are lost.
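The read/write check performed during POST in the steps above can be sketched in a few lines. This is only an illustration, not how any particular BIOS implements it: the `bytearray` stands in for physical RAM, and the function name `memory_test` and the two test patterns (`0x55`, `0xAA`) are assumptions chosen for the example.

```python
def memory_test(memory: bytearray, patterns=(0x55, 0xAA)) -> list[int]:
    """Write each pattern to every address, read it back, and
    return the addresses where the value read differs from the value written."""
    bad_addresses = []
    for address in range(len(memory)):
        for pattern in patterns:
            memory[address] = pattern        # write to the cell
            if memory[address] != pattern:   # read from the same cell
                bad_addresses.append(address)
                break
    return bad_addresses


ram = bytearray(1024)        # hypothetical stand-in for 1 KB of RAM
failures = memory_test(ram)
print(failures)              # [] when every cell holds what was written
```

Note that a test like this overwrites whatever was in memory, which is acceptable at power-on because nothing has been loaded yet.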

A common question about desktop computers is, "Why does a computer require so many different memory systems?"

Different Types of Computer Memory

A standard computer typically includes:

- Level 1 and level 2 caches

- Standard system RAM

- Virtual memory

- A hard drive

Why are there so many? Understanding this can give you valuable insights into how memory works!

High-performance CPUs require fast access to vast amounts of data to optimize their capabilities. If the CPU cannot retrieve the data it needs, it halts and waits. Modern CPUs, which run at speeds of approximately 1 gigahertz, are capable of processing immense quantities of data—potentially billions of bytes each second. The challenge for computer engineers is that memory fast enough to keep up with a 1-gigahertz CPU is prohibitively expensive—far too costly to be used in large amounts.

Computer engineers have addressed the cost issue by implementing a system of "tiered" memory—utilizing expensive memory in limited amounts and supplementing it with larger quantities of more affordable memory.

The most economical type of read/write memory widely used today is the hard disk. Hard disks offer vast amounts of affordable, permanent storage. You can purchase hard disk space for just a few cents per megabyte, but reading a megabyte from a hard disk can take almost a second. Since hard disk storage is so cheap and abundant, it forms the final level of a CPU’s memory hierarchy, known as virtual memory.

The next tier in the memory hierarchy is RAM. We cover RAM in detail in How RAM Works, but there are a few important aspects of RAM to mention here.

The bit size of a CPU indicates how many bytes of data it can retrieve from RAM at once. For instance, a 16-bit CPU can handle 2 bytes at a time (1 byte = 8 bits, so 16 bits = 2 bytes), while a 64-bit CPU can process 8 bytes simultaneously.

Megahertz (MHz) measures a CPU's processing speed, or clock cycle, in millions per second. For example, a 32-bit, 800-MHz Pentium III can theoretically process 4 bytes at once, 800 million times each second (perhaps more with pipelining)! The memory system is designed to support these demands.

System RAM alone cannot keep pace with the CPU’s speed, which is why a cache is needed (we’ll discuss this in detail later). Still, the faster the RAM, the better. Most chips today have a cycle time of 50 to 70 nanoseconds. The read/write speed of RAM generally depends on the type of memory used, such as DRAM, SDRAM, or RAMBUS. We will explore these different memory types further.

Let’s start by discussing system RAM.

System RAM

System RAM speed is determined by bus width and bus speed. Bus width indicates how many bits can be transferred to the CPU at once, while bus speed refers to how many times per second a group of bits can be sent. A bus cycle happens every time data moves from memory to the CPU. For instance, a 100-MHz 32-bit bus can theoretically send 4 bytes (32 bits divided by 8 bits per byte) to the CPU 100 million times per second. In contrast, a 66-MHz 16-bit bus can transfer 2 bytes 66 million times per second. By increasing both the bus width from 16 bits to 32 bits and the speed from 66 MHz to 100 MHz, data flow to the CPU roughly triples (from 132 million bytes to 400 million bytes per second).
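The arithmetic behind these figures is simply bytes per cycle multiplied by cycles per second. The short sketch below reproduces the two bus examples and compares the faster bus with the 800-MHz, 32-bit CPU mentioned earlier; the function name `peak_bytes_per_second` is made up for the illustration, and the results are theoretical peaks, not measured throughput.

```python
def peak_bytes_per_second(width_bits: int, clock_mhz: int) -> int:
    """Theoretical peak: bytes moved per cycle times cycles per second."""
    return (width_bits // 8) * clock_mhz * 1_000_000


old_bus = peak_bytes_per_second(16, 66)    # 2 bytes x 66 million cycles
new_bus = peak_bytes_per_second(32, 100)   # 4 bytes x 100 million cycles
cpu = peak_bytes_per_second(32, 800)       # 800-MHz, 32-bit CPU example

print(f"{old_bus:,} -> {new_bus:,} bytes/s ({new_bus / old_bus:.2f}x)")
# 132,000,000 -> 400,000,000 bytes/s (3.03x)
print(f"CPU peak demand is {cpu // new_bus}x what the faster bus delivers")
# CPU peak demand is 8x what the faster bus delivers
```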

In practice, RAM doesn’t always run at peak speed due to latency. Latency refers to the number of clock cycles required to retrieve a bit of data. For example, RAM rated at 100 MHz can send a bit in 0.00000001 seconds, but it may take 0.00000005 seconds to initiate the read process for the first bit. To overcome latency, CPUs employ a special method called burst mode.

Burst mode relies on the assumption that the CPU will request data from sequential memory cells. The memory controller expects that the CPU will continue working with data from a series of memory addresses, so it reads multiple consecutive bits of data at once. This setup means that latency only affects the first bit, while successive bits are retrieved much faster. The rated burst mode of memory is typically represented by four numbers separated by dashes. The first number indicates the clock cycles required to start a read operation; the following three numbers show how many cycles it takes to read each subsequent bit in the row, also called the wordline. For instance, a 5-1-1-1 burst mode means it takes five cycles to read the first bit and one cycle per subsequent bit. Lower numbers indicate better performance.
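To see what a rating like 5-1-1-1 buys you, the sketch below adds up the cycles for one burst and compares it with a hypothetical memory that pays the full first-access latency on every read. That "no burst" baseline is an assumption made for comparison, and the helper names are invented for the example. At 100 MHz one cycle lasts 10 nanoseconds, matching the figures given above.

```python
def burst_cycles(rating: str) -> int:
    """Total clock cycles for one burst, given a rating such as '5-1-1-1'."""
    return sum(int(n) for n in rating.split("-"))


def no_burst_cycles(rating: str) -> int:
    """Assumed baseline: every access pays the first-access latency."""
    parts = rating.split("-")
    return int(parts[0]) * len(parts)


def cycles_to_ns(cycles: int, clock_mhz: int) -> float:
    """Convert clock cycles to nanoseconds (one cycle = 1000 / MHz ns)."""
    return cycles * 1000 / clock_mhz


rating = "5-1-1-1"
print(burst_cycles(rating))                      # 8
print(no_burst_cycles(rating))                   # 20
print(cycles_to_ns(burst_cycles(rating), 100))   # 80.0
```

In this example, burst mode delivers four reads in 8 cycles instead of 20.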

Burst mode is often paired with pipelining, another technique that helps reduce the impact of latency. Pipelining organizes data retrieval into an assembly-line process. The memory controller simultaneously fetches one or more words from memory, sends them to the CPU, and writes back words to the memory cells. When burst mode and pipelining work together, they can significantly reduce the delays caused by latency.
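The assembly-line effect can be shown with simple counting. The sketch below assumes three stages (fetch from memory, send to the CPU, write back) that each take one time step; both the stage count and the equal step length are simplifying assumptions, and the function names are hypothetical.

```python
def sequential_steps(words: int, stages: int = 3) -> int:
    """Each word finishes every stage before the next word starts."""
    return words * stages


def pipelined_steps(words: int, stages: int = 3) -> int:
    """Stages overlap: once the pipeline is full, one word completes per step."""
    if words == 0:
        return 0
    return stages + words - 1


for n in (1, 4, 100):
    print(n, sequential_steps(n), pipelined_steps(n))
# 1 3 3
# 4 12 6
# 100 300 102
```

The longer the run of words, the closer the pipelined cost gets to one step per word.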

So, why wouldn’t you simply choose the fastest, widest memory available? The memory's bus speed and width should align with the system's bus. You can use memory built for 100 MHz in a 66 MHz system, but it will only run at the 66 MHz speed, rendering the extra speed useless. Additionally, 32-bit memory won’t fit onto a 16-bit bus.

Even with a wide and fast bus, it still takes longer for data to travel from the memory card to the CPU than for the CPU to process it. That’s where caches come into play.

Cache and Registers

Caches are designed to ease the bottleneck between the CPU and memory by making the data the CPU uses most often almost instantly available. This is achieved by building a small amount of memory directly into the CPU, referred to as primary or level 1 cache. Level 1 cache is quite small, usually ranging from 2 kilobytes (KB) to 64 KB.

The secondary or level 2 cache is typically located on a memory card near the CPU, with a direct connection to it. A dedicated integrated circuit on the motherboard, known as the L2 controller, manages how the CPU uses the level 2 cache. Depending on the CPU, the size of the level 2 cache ranges from 256 KB to 2 megabytes (MB). In most systems, around 95 percent of the data needed by the CPU is accessed from the cache, significantly reducing the time the CPU spends waiting for data from the main memory.
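A small simulation helps show why a high hit rate matters so much. The sketch below models a cache that evicts the least recently used entry, which is one common policy and not necessarily what any given CPU uses, and then computes the average access time. The access pattern, the cache size, and the latencies (10 ns for cache, 60 ns for main memory) are all assumptions chosen for illustration.

```python
from collections import OrderedDict


def hit_rate(addresses, cache_lines: int) -> float:
    """Fraction of accesses served from a least-recently-used cache."""
    cache = OrderedDict()
    hits = 0
    for address in addresses:
        if address in cache:
            hits += 1
            cache.move_to_end(address)      # mark as most recently used
        else:
            cache[address] = True           # load from main memory
            if len(cache) > cache_lines:
                cache.popitem(last=False)   # evict least recently used
    return hits / len(addresses)


def average_access_ns(rate: float, cache_ns: float, memory_ns: float) -> float:
    """Weighted average of cache hits and main-memory misses."""
    return rate * cache_ns + (1 - rate) * memory_ns


# A loop that touches the same 8 addresses 20 times: only the first pass misses.
accesses = list(range(8)) * 20
rate = hit_rate(accesses, cache_lines=16)
print(f"{rate:.0%}")                                 # 95%
print(f"{average_access_ns(rate, 10, 60):.1f} ns")   # 12.5 ns
```

With these assumed numbers, a 95 percent hit rate brings the average access time much closer to the cache's speed than to main memory's.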

In some lower-end systems, the level 2 cache is omitted entirely. However, many high-performance CPUs now incorporate the level 2 cache directly into the CPU chip itself. As a result, the size of the level 2 cache and its onboard (integrated with the CPU) placement are key factors in determining CPU performance. For more information on caching, see How Caching Works.

A specific type of RAM, static random access memory (SRAM), is primarily used for cache. SRAM uses multiple transistors, typically four to six, for each memory cell. It has an external gate array known as a bistable multivibrator that switches, or flip-flops, between two states. This means it does not need to be continually refreshed the way DRAM does. As long as power is supplied, each SRAM cell retains its data. Because each cell is so complex, SRAM is too expensive to use as standard RAM, but it is very fast.

SRAM in the cache can be asynchronous or synchronous. Synchronous SRAM is designed to operate at the exact speed of the CPU, while asynchronous SRAM is not. This small difference in timing affects overall performance, so synchronous SRAM that matches the CPU's clock speed is the better choice. (For more details on various RAM types, see How RAM Works.)

The last component of memory is the registers. These are small memory units embedded directly within the CPU that hold specific data required by the CPU, especially for the arithmetic and logic unit (ALU). Registers are an essential part of the CPU and are directly managed by the compiler, which provides the data that the CPU needs to process. For further details on registers, refer to How Microprocessors Work.

For a convenient printable reference to computer memory, you can download the Mytour Big List of Computer Memory Terms.

Memory is commonly divided into two primary categories: volatile and nonvolatile. Volatile memory loses all its data as soon as the system shuts down; it needs continuous power to retain its contents. Most forms of RAM are classified as volatile.

Nonvolatile memory, however, retains its data even when the device or system is powered off. Examples of nonvolatile memory include ROM and Flash memory storage devices like CompactFlash and SmartMedia cards.