What’s the reason behind 5280 feet in a mile, and how do nautical miles differ from the standard miles used on land? Why are milk and gasoline sold by the gallon? What’s the origin of the abbreviation “lb”? Dive into the intriguing stories behind some of the most commonly used units of measurement in the United States.

1. The Mile

The mile owes its origins to the Romans. | Sycikimagery/Moment/Getty Images

The idea of the mile dates back to ancient Rome. The Romans measured distance using the mille passum, meaning “a thousand paces.” Each pace equaled five Roman feet, which were slightly shorter than today’s feet, so the Roman mile came to 5000 Roman feet, or about 4850 modern feet.

If the mile started as 5000 Roman feet, how did it grow to 5280 feet? The answer lies in the furlong. Long before it became a horse-racing term, the furlong was the length of furrow a team of oxen could plow without resting. In 1592, English lawmakers defined the mile as eight furlongs. Since a furlong was 660 feet, the mile became 8 × 660 = 5280 feet.
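As a quick check on the arithmetic above, here is a minimal Python sketch that multiplies out both definitions. The constant names are illustrative only; the figures come straight from the text.

```python
# Figures from the text: a Roman mile is a thousand paces of five Roman feet,
# and the English statute mile is eight furlongs of 660 feet each.
PACES_PER_ROMAN_MILE = 1000
ROMAN_FEET_PER_PACE = 5
FURLONGS_PER_MILE = 8
FEET_PER_FURLONG = 660

roman_mile_in_roman_feet = PACES_PER_ROMAN_MILE * ROMAN_FEET_PER_PACE
statute_mile_in_feet = FURLONGS_PER_MILE * FEET_PER_FURLONG

print(roman_mile_in_roman_feet)  # 5000
print(statute_mile_in_feet)      # 5280
```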

2. The Nautical Mile

The nautical mile stands as a unique unit of measurement. | Roc Canals/Moment/Getty Images

While the statute mile traces back to Roman traditions and oxen plowing, the nautical mile has a different origin. To understand it, think back to high school geometry. A nautical mile was initially defined as one minute of arc along a meridian of the Earth. Imagine a full circle around the Earth through the poles divided into 360 degrees, with each degree split into 60 arc minutes. Each minute then represents 1/21,600th of the Earth’s circumference, which makes a nautical mile about 6076 feet.
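The division described above can be sketched in a few lines of Python. Note the assumptions, none of which come from the article: a circumference through the poles of roughly 40,008 kilometers, the modern international nautical mile of exactly 1852 meters, and the international foot of exactly 0.3048 meters. Treat the arc-minute result as approximate, since the Earth is not a perfect sphere.

```python
DEGREES_IN_CIRCLE = 360
ARC_MINUTES_PER_DEGREE = 60
arc_minutes_in_circle = DEGREES_IN_CIRCLE * ARC_MINUTES_PER_DEGREE

# Assumption (not from the article): circumference through the poles, in meters.
ASSUMED_POLAR_CIRCUMFERENCE_M = 40_008_000
# Assumption (not from the article): modern exact definitions.
INTERNATIONAL_NAUTICAL_MILE_M = 1852
METERS_PER_FOOT = 0.3048

# Length of one minute of arc under the assumed circumference.
one_arc_minute_m = ASSUMED_POLAR_CIRCUMFERENCE_M / arc_minutes_in_circle
# The modern nautical mile expressed in feet.
nautical_mile_ft = INTERNATIONAL_NAUTICAL_MILE_M / METERS_PER_FOOT

print(arc_minutes_in_circle)    # 21600
print(round(one_arc_minute_m))  # 1852
print(round(nautical_mile_ft))  # 6076
```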

3. The Acre

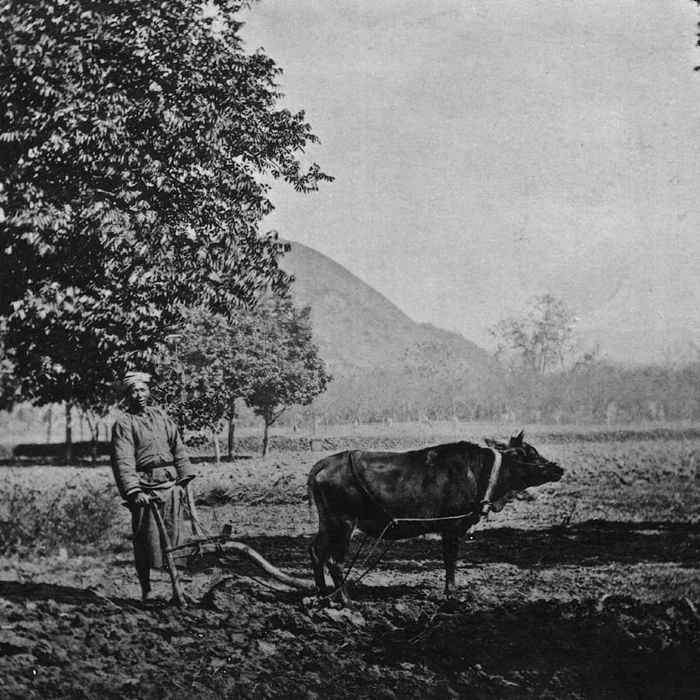

In theory, this ox could plow an entire acre in a single day. | Hulton Archive/GettyImages

Similar to the mile, the acre’s origins are tied to the furlong. A furlong was the distance a team of oxen could plow without stopping to rest. The term “acre” comes from an Old English word meaning “open field” and originally referred to the amount of land a single farmer with one ox could plow in a day. Over time, the Saxons in England defined this area as a strip of land one furlong (660 feet) long and one chain (66 feet) wide, resulting in the modern acre of 43,560 square feet.
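The acre’s area follows directly from the strip dimensions given above. A minimal Python sketch, with illustrative constant names:

```python
# Strip dimensions from the text: one furlong long by one chain wide.
FURLONG_FEET = 660
CHAIN_FEET = 66

acre_square_feet = FURLONG_FEET * CHAIN_FEET

print(acre_square_feet)  # 43560
```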

4. The Foot

Not every human foot measures exactly 1 foot in length. | Construction Photography/Avalon/GettyImages

As the name suggests, historians believe the foot was originally based on the length of a human foot. The Romans used a unit of measure called a pes, divided into 12 smaller units known as unciae. The Roman pes was slightly shorter than today’s foot, measuring about 11.6 inches. Early English units based on foot length were also shorter than the modern 12-inch foot. The 12-inch foot became standard during the reign of Henry I of England in the 12th century, leading some to speculate it was based on the king’s foot size.

5. The Gallon

Gallons of milk, not wine. | John Greim/GettyImages

The term “gallon” traces back to the Roman word galeta, meaning “a pailful.” Over time, various gallon measurements emerged, but the U.S. gallon likely derives from the “wine gallon,” or Queen Anne gallon, standardized in 1707 during her reign. This gallon measured 231 cubic inches, and some historians suggest it was based on a container meant to hold eight troy pounds of wine.

6. The Pound

An antique scale with two 1-pound weights. | Mark Polott/Photodisc/Getty Images

The pound, like many other units, traces its origins to ancient Rome. It evolved from the Roman unit libra, which is why “lb” is its abbreviation. The term “pound” itself derives from the Latin pondo, meaning “weight.” The avoirdupois pound we use today was established in the 14th century by English merchants, who created this system to trade goods by weight instead of volume. They defined the new unit of measure as equal to 7000 grains, an existing standard, and divided each pound into 16 ounces.

7. Horsepower

Horses are truly remarkable for their strength. | Wolfgang Kaehler/GettyImages

In the 18th century, steam engine builders sought a way to measure their machines’ power. James Watt devised a clever method by comparing engines to horses. After observing horses, he determined that an average horse could lift 550 pounds at a rate of one foot per second, equating to 33,000 foot-pounds of work per minute.

However, not all experts agree that Watt’s calculations were entirely scientific. One popular tale suggests that Watt initially tested his theory using ponies instead of horses. He observed that ponies could perform 22,000 foot-pounds of work per minute and estimated horses were 50% stronger, leading to his estimate of 33,000 foot-pounds per minute.
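Both routes to the 33,000 figure can be checked with a short Python sketch. The variable names are illustrative, and the pony numbers come from the popular tale above rather than from any documented measurement.

```python
# Watt's figure as stated in the text: 550 pounds lifted one foot per second.
POUNDS_LIFTED = 550
FEET_PER_SECOND = 1
SECONDS_PER_MINUTE = 60

horse_ft_lb_per_minute = POUNDS_LIFTED * FEET_PER_SECOND * SECONDS_PER_MINUTE

# The pony tale: 22,000 foot-pounds per minute, with horses taken to be 50% stronger.
PONY_FT_LB_PER_MINUTE = 22_000
horse_from_pony_estimate = PONY_FT_LB_PER_MINUTE * 3 // 2  # 1.5 times the pony figure

print(horse_ft_lb_per_minute)    # 33000
print(horse_from_pony_estimate)  # 33000
```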