A digital sound projector

Image courtesy of Consumer Guide Products

In the world of home theater, many people envision large setups with a big screen and sound emanating from an array of speakers. However, the typical home-theater configuration, featuring surround-sound speakers and a subwoofer, isn't always practical. Some homes lack the space for all that gear, while others avoid the mess of wires and the complexity of speaker placement adjustments.

This is where virtual surround sound steps in. It replicates the experience of a multi-speaker surround-sound system using fewer speakers and minimal wiring. There are two main types of virtual surround systems — 2.1 surround and digital sound projection. Generally, 2.1 systems use two speakers placed in front of the listener along with a subwoofer located elsewhere in the room, providing the experience of a 5.1 surround-sound setup, which includes five speakers and a subwoofer. In contrast, digital sound projectors often feature a single strip of small speakers to produce sound, and many do not come with a subwoofer.

Despite their differences in design, these systems operate on the same basic principles. They employ various techniques to manipulate sound waves so that the audio appears to come from more speakers than are actually present. These methods are based on psychoacoustics, the study of how humans perceive sound. In this article, we will examine the characteristics of human hearing that allow two speakers to create the illusion of five, as well as provide tips for choosing the right virtual surround-sound system.

Understanding Sound Perception

Virtual surround-sound systems leverage fundamental principles of speakers, sound waves, and hearing. A speaker functions by converting electrical signals into sound through the movement of a diaphragm—a cone that vibrates back and forth, pushing and pulling the surrounding air. As the diaphragm moves outward, it generates a compression, an area of higher pressure in the air. When it retracts, it creates a rarefaction, an area of lower pressure. For more on this process, check out How Speakers Work.

Compressions and rarefactions occur due to the movement of air particles. When the particles are pushed together, they form an area of higher pressure and press against adjacent particles. When they spread apart, they leave an area of lower pressure behind. This alternating pattern of compressions and rarefactions travels through the air as a longitudinal wave.

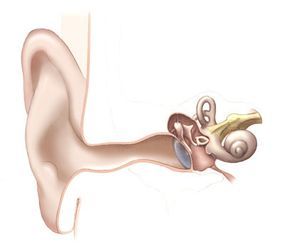
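
To put numbers on this pattern, here is a minimal sketch of an idealized pure tone. It assumes a speed of sound of 343 m/s (air at roughly room temperature) and a simple sine-shaped wave; the function names are illustrative and not taken from any audio library.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 degrees C


def wavelength(frequency_hz: float) -> float:
    """Distance in meters between one compression and the next."""
    return SPEED_OF_SOUND / frequency_hz


def pressure_deviation(x_m: float, t_s: float, frequency_hz: float,
                       amplitude: float = 1.0) -> float:
    """Pressure change at position x and time t for an idealized tone.

    Positive values are compressions, negative values are rarefactions.
    """
    phase = 2 * math.pi * frequency_hz * (t_s - x_m / SPEED_OF_SOUND)
    return amplitude * math.sin(phase)


if __name__ == "__main__":
    tone = 440.0  # Hz
    lam = wavelength(tone)
    print(f"wavelength of a {tone:.0f} Hz tone: {lam:.3f} m")
    # Sample the wave at quarter-wavelength steps at time zero.
    for step in range(5):
        x = step * lam / 4
        p = pressure_deviation(x, 0.0, tone)
        if abs(p) < 1e-9:
            label = "neutral"
        elif p > 0:
            label = "compression"
        else:
            label = "rarefaction"
        print(f"x = {x:.3f} m: {label}")
```

Stepping along the wave a quarter wavelength at a time shows the air alternating between compression and rarefaction, with neutral points in between.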

When this wave of varying pressure reaches your ear, several steps occur that allow you to perceive it as sound. The wave reflects off the pinna, the outer part of your ear, also known as the auricle. The sound then travels down the ear canal, causing the tympanic membrane, or eardrum, to vibrate. These vibrations trigger a cascade of tiny structures within the ear, and ultimately, the vibrations are transferred to the cochlear nerve, which sends them to the brain as nerve impulses. Your brain processes these impulses and interprets them as sound. For more about the inner workings of your ear and how you perceive sound, see How Hearing Works.

Your brain's ability to interpret these signals allows you to grasp the meaning behind the sound. If it's a sequence of spoken words, you can combine them into coherent sentences. If it's a song, you can understand the lyrics, feel the rhythm and tone, and even form an opinion about what you're hearing. Additionally, you can recall whether you've heard the same song or similar ones before.

Beyond helping you interpret sound, your brain also relies on various aural cues to determine its origin. This process is usually subconscious, but the ability to pinpoint where a sound comes from is crucial. For animals, this skill is vital for finding food, evading predators, and locating members of their species. For humans, it helps you assess whether someone is following you or whether a knock is at your neighbor's door or your own.

These aural cues, along with the physical nature of sound waves, play a central role in virtual surround sound technology. We'll explore these concepts further in the next section.

One way to simulate a virtual surround-sound experience is through a system known as Dolby Virtual Speaker. This is a set of algorithms and rules designed to replicate multi-channel sound for devices equipped with just two standard speakers. It is typically found in certain TVs, stereo systems, and computers, rather than as a separate component of a home-theater setup. Another similar technology is Dolby Headphone, which uses advanced sound-processing algorithms to make a regular pair of headphones replicate the effect of surround-sound speakers. To learn more about other Dolby innovations, check out How Movie Sound Works.

Understanding Sound Cues and Virtual Surround Sound

Many people have experienced a moment of sudden silence, such as in a classroom during a test, only for that quiet to be shattered by an unexpected noise, like change dropping from someone's pocket. Instinctively, people often turn their heads toward the sound's origin. This immediate response demonstrates how quickly your brain can identify the sound's location, even if you only hear with one ear.

The brain determines the location of a sound by analyzing various characteristics of the sound. One of these is the difference in what your right and left ears hear. Another factor involves how sound waves interact with your head and body. These combined aural cues are what the brain uses to identify the source of the sound.

Picture a scenario where the coins from our quiet classroom example fall somewhere to your right. Because sound travels as physical waves through the air — a process that requires time — it reaches your right ear slightly earlier than your left. Additionally, the sound reaches your left ear at a lower volume due to the natural dissipation of the sound wave and the absorption and reflection by your head. The volume difference between the left and right ears is known as the interaural level difference (ILD), while the time delay is referred to as the interaural time difference (ITD).

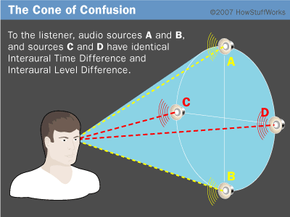
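
To get a feel for how small these time differences are, here is a minimal sketch that estimates the ITD with Woodworth's spherical-head approximation. That formula, the 8.75 cm head radius, and the 343 m/s speed of sound are assumptions brought in for illustration; real heads are not spheres, so treat the results as rough estimates.

```python
import math


def itd_seconds(azimuth_deg: float,
                head_radius_m: float = 0.0875,
                speed_of_sound: float = 343.0) -> float:
    """Estimate the interaural time difference for a distant source.

    azimuth_deg is the angle away from straight ahead: 0 is directly in
    front, 90 is directly to one side. Uses the spherical-head
    approximation ITD = (r / c) * (theta + sin(theta)).
    """
    if not 0 <= azimuth_deg <= 90:
        raise ValueError("this approximation covers 0 to 90 degrees only")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


if __name__ == "__main__":
    for angle in (0, 15, 30, 60, 90):
        microseconds = itd_seconds(angle) * 1e6
        print(f"{angle:2d} degrees: about {microseconds:.0f} microseconds")
```

Even for a sound directly to one side, the estimated delay stays well under a millisecond, which gives a sense of how fine a timing difference the brain works with.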

The time and volume differences give your brain a good sense of whether a sound is coming from the left or right. However, these differences provide less information about whether a sound is coming from above or below you. This is because changing a sound's elevation changes the path it takes to your ears, but it may leave the difference between what your left and right ears hear unchanged. It can also be challenging to tell whether a sound is in front of or behind you based solely on time and level differences, because sounds coming from different directions may produce identical ILDs and ITDs. These identical ear differences occur along a cone-shaped region extending outward from your ear, called the cone of confusion.
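
The front-back ambiguity can be checked with simple geometry. The sketch below treats the ears as two points in open air, 8.75 cm either side of the head's center, and ignores the head itself; both are simplifying assumptions, and the function names are illustrative.

```python
import math

EAR_OFFSET_M = 0.0875   # assumed distance from head center to each ear
SPEED_OF_SOUND = 343.0  # m/s, assumed


def ear_distances(azimuth_deg: float, distance_m: float = 2.0):
    """Return (left, right) distances from a source to each ear.

    Azimuth 0 is straight ahead, 90 is to the right, 180 is directly behind.
    """
    theta = math.radians(azimuth_deg)
    x = distance_m * math.sin(theta)  # left-right position of the source
    y = distance_m * math.cos(theta)  # front-back position of the source
    left = math.hypot(x + EAR_OFFSET_M, y)
    right = math.hypot(x - EAR_OFFSET_M, y)
    return left, right


def simple_itd(azimuth_deg: float, distance_m: float = 2.0) -> float:
    """Time difference in seconds; positive means the right ear hears it first."""
    left, right = ear_distances(azimuth_deg, distance_m)
    return (left - right) / SPEED_OF_SOUND


def simple_ild_db(azimuth_deg: float, distance_m: float = 2.0) -> float:
    """Level difference in dB from distance alone; positive means louder on the right."""
    left, right = ear_distances(azimuth_deg, distance_m)
    return 20 * math.log10(left / right)


if __name__ == "__main__":
    for label, angle in (("front right", 30), ("rear right", 150)):
        print(f"{label}: ITD {simple_itd(angle) * 1e6:.1f} microseconds, "
              f"ILD {simple_ild_db(angle):.3f} dB")
```

In this simplified model, a source 30 degrees to the front right and its mirror image 150 degrees to the rear right produce matching time and level differences, so those two cues alone cannot separate them.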

While ILDs and ITDs are important for sound localization, people with hearing loss in one ear can often still figure out the direction of a sound. This is possible because the brain can use the way the sound reflects off the surfaces of the working ear to estimate its source.

Your brain can determine the location of a sound by analyzing how its waves are reflected off the pinna, or auricle, of your ear.

Image courtesy of the National Institute on Deafness and Other Communication Disorders

When a sound wave reaches a person, it bounces off their head, shoulders, and the curved outer ear. These reflections slightly alter the sound wave. The interaction of these reflected waves can cause some parts of the wave to amplify or diminish, changing the sound's volume or tone. These alterations are described by head-related transfer functions (HRTFs). Unlike ILDs and ITDs, these reflections change with the sound's elevation, that is, whether it comes from above or below. The direction of the sound, whether from the front or behind, also affects them.

HRTFs have a subtle yet complex influence on the shape of the sound wave. The brain decodes these variations in the wave’s shape, helping determine where the sound originated from. In the next section, we’ll explore how researchers have studied this phenomenon and applied it to develop virtual surround-sound systems.

Exploring Sound Reflections

The outer ear, or auricle, contains various surfaces that can reflect sound waves. Many of these surfaces are curved, which can direct sound toward other parts of the ear, making the wave bounce multiple times before reaching the eardrum. The interactions with the head, face, hair, and torso further complicate this process. Measuring these reflections manually would be incredibly difficult. For this reason, scientists have used sound sources, microphones, and computer programs to study head-related transfer functions (HRTFs).

In certain experiments, researchers have placed small microphones on the bodies of human subjects. In other cases, they've used realistic mannequins that closely resemble human skin, cartilage, and body proportions. One such mannequin, the Knowles Electronic Manikin for Acoustic Research (KEMAR), has been used in HRTF studies at institutions like the MIT Media Lab.

The sole purpose of these microphones is to record sound. Computers then analyze the slight variations in sounds, whether they're due to different sound sources or the way a single sound interacts with different areas of the body. This analysis ultimately leads to an algorithm — a collection of rules that explains how the HRTFs and other variables have altered the shape of the sound wave. When this algorithm is applied to a new sound wave, it reshapes it, giving it the same characteristics as the original wave after interacting with the person's body.
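
One common way to apply a measured response to a new sound is convolution with a short filter for each ear, often called a head-related impulse response. The sketch below assumes that approach; the filter values are invented for illustration and are not measured HRTF data.

```python
def convolve(signal, impulse_response):
    """Apply a filter to a signal by direct convolution."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, sample in enumerate(signal):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


# Hypothetical responses for a source on the listener's right: the right
# ear hears it immediately, the left ear hears it two samples later and
# at a lower level.
HRIR_RIGHT_EAR = [0.9, 0.3, 0.1]
HRIR_LEFT_EAR = [0.0, 0.0, 0.5, 0.2, 0.1]


def render_binaural(mono_signal):
    """Return (left, right) ear signals for a mono input."""
    left = convolve(mono_signal, HRIR_LEFT_EAR)
    right = convolve(mono_signal, HRIR_RIGHT_EAR)
    return left, right


if __name__ == "__main__":
    click = [1.0, 0.0, 0.0, 0.0]
    left, right = render_binaural(click)
    print("left ear: ", left)
    print("right ear:", right)
```

Feeding in a single click returns the filters themselves, which makes it easy to see the delay and level cues that would be stamped onto any other sound.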

Algorithms like these form the core of virtual surround-sound systems. Here's how it works:

- Researchers use microphones to record and study the sound produced by a 5.1-surround speaker system. Often, these studies include participants with various ear and body shapes to understand how different people perceive the same sound.

- Researchers then use a computer to develop an algorithm capable of recreating that sound.

- This 5.1-channel algorithm is then applied to a two-speaker setup, simulating the sound environment that a real 5.1-channel speaker system would produce.
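
The last step can be sketched in a few lines. The example below stands in a simple delay and gain per ear for each virtual speaker; commercial systems use far more detailed filters, and every value in the `CUES` table is a made-up placeholder rather than measured data.

```python
# Hypothetical cues for each virtual speaker, as
# (left_delay, left_gain, right_delay, right_gain); delays are in samples.
CUES = {
    "front_left": (0, 1.0, 12, 0.6),
    "center": (0, 0.7, 0, 0.7),
    "front_right": (12, 0.6, 0, 1.0),
    "surround_left": (0, 0.8, 28, 0.4),
    "surround_right": (28, 0.4, 0, 0.8),
}
MAX_DELAY = max(max(cue[0], cue[2]) for cue in CUES.values())


def virtualize(channels):
    """Mix named surround channels down to (left, right) speaker feeds.

    channels maps a name from CUES to a list of samples.
    """
    if not channels:
        return [], []
    length = max(len(samples) for samples in channels.values()) + MAX_DELAY
    left = [0.0] * length
    right = [0.0] * length
    for name, samples in channels.items():
        left_delay, left_gain, right_delay, right_gain = CUES[name]
        for i, sample in enumerate(samples):
            left[i + left_delay] += left_gain * sample
            right[i + right_delay] += right_gain * sample
    return left, right


if __name__ == "__main__":
    # A single click in the right surround channel only.
    left, right = virtualize({"surround_right": [1.0]})
    print("right feed starts with:", right[0])
    print("left feed at sample 28:", left[28])
```

Each channel ends up in both speaker feeds, but with different timing and level, which is what lets two speakers carry cues for five positions.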

A Polk 5.1 surround-sound SurroundBar

Image courtesy Polk Audio

In essence, this process adds aural cues to the sound wave, tricking your brain into perceiving the sound as if it originated from five different sources instead of just two.

Virtual Surround Sound Tools and Techniques

A Sony 2.1 surround-sound system with subwoofer and receiver/amplifier

Image courtesy Consumer Guide Products

Beyond the natural interaction between sound waves and the human body, virtual surround-sound systems employ various techniques to simulate 5.1-channel audio. Some systems, particularly digital sound projectors, bounce sound waves off the room's walls. This reflection makes it seem as though sound is coming from behind you as it bounces off the wall behind your head. Such systems may require you to input your room's dimensions or calibrate the speakers with a microphone to ensure the reflections occur at the correct angles and positions.
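
To see why room dimensions matter, here is a minimal sketch of the geometry for a single bounce off a side wall. It uses the mirror-image trick from basic acoustics: reflect the listener across the wall and draw a straight line to the reflection. The flat-wall room, the coordinates, and the function name are assumptions for illustration, not how any particular product is calibrated.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def side_wall_bounce(speaker, listener, wall_x):
    """Work out a single bounce off a flat side wall at x = wall_x.

    speaker and listener are (x, y) positions in meters, with the speaker
    facing along the +y axis. Returns (aim_angle_deg, path_m, extra_delay_s):
    the angle away from straight ahead at which to aim the beam, the length
    of the bounced path, and how much later it arrives than the direct sound.
    """
    sx, sy = speaker
    lx, ly = listener
    mirror_x = 2 * wall_x - lx  # listener reflected across the wall
    dx, dy = mirror_x - sx, ly - sy
    path = math.hypot(dx, dy)
    direct = math.hypot(lx - sx, ly - sy)
    aim_angle = math.degrees(math.atan2(dx, dy))
    return aim_angle, path, (path - direct) / SPEED_OF_SOUND


if __name__ == "__main__":
    angle, path, delay = side_wall_bounce((0.0, 0.0), (0.0, 3.0), wall_x=2.0)
    print(f"aim {angle:.1f} degrees off center, path {path:.2f} m, "
          f"arrives {delay * 1000:.1f} ms after the direct sound")
```

Changing `wall_x` or the listener position changes both the aiming angle and the delay, which is why these systems need to know the room before the reflections land where they should.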

Many two-speaker systems use crosstalk cancellation, which leverages destructive interference to prevent unwanted overlap between sounds intended for the left and right ears. This minimizes the chance of your ears hearing each other’s sound cues, preserving the illusion of multi-speaker sound.
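
A stripped-down model shows how the cancellation works. Assume each ear hears its own speaker at full level plus a quieter copy of the other speaker, with no delay and no change in tone; real systems must account for both, so the `leak` factor and function names here are purely illustrative.

```python
def crosstalk_cancel(target_left, target_right, leak=0.5):
    """Compute speaker feeds so each ear receives only its target signal.

    leak is the assumed fraction of each speaker's sound that reaches the
    opposite ear. Each feed includes an inverted, scaled copy of the other
    channel, which cancels the leaked sound at the ear.
    """
    if not 0 <= leak < 1:
        raise ValueError("leak must be at least 0 and less than 1")
    scale = 1.0 / (1.0 - leak * leak)
    pairs = list(zip(target_left, target_right))
    speaker_left = [(l - leak * r) * scale for l, r in pairs]
    speaker_right = [(r - leak * l) * scale for l, r in pairs]
    return speaker_left, speaker_right


def at_ears(speaker_left, speaker_right, leak=0.5):
    """Simulate what reaches each ear: own speaker plus leaked opposite one."""
    pairs = list(zip(speaker_left, speaker_right))
    ear_left = [l + leak * r for l, r in pairs]
    ear_right = [r + leak * l for l, r in pairs]
    return ear_left, ear_right


if __name__ == "__main__":
    want_left = [1.0, 0.0, -0.5]
    want_right = [0.0, 1.0, 0.25]
    feeds = crosstalk_cancel(want_left, want_right)
    heard_left, heard_right = at_ears(*feeds)
    print("left ear hears: ", [round(v, 6) for v in heard_left])
    print("right ear hears:", [round(v, 6) for v in heard_right])
```

In this model the signals arriving at each ear match the intended ones, because the leaked sound and the inverted copy cancel each other out.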

The algorithms and crosstalk cancellation methods rely on a computer processor, typically located in the receiver/amplifier. This device includes a sound-processing chip that applies the algorithms to sound waves in real time. Just like any amplifier, it receives sound data from a source, such as a satellite box or DVD player. It processes the sound, adjusts the volume and quality, and then sends the output to the speakers. In some systems, the receiver/amplifier is integrated into the speaker units themselves.

The main drawback of virtual surround-sound systems is that the immersive experience they provide is a simulated effect, not one generated by multiple speakers. To maintain this illusion, you must be in the optimal position, often referred to as the sweet spot, and face the television screen directly. Moving too far to either side can break the illusion, leaving you outside the intended sound field. Occasionally, sounds that shift from one side to the other, or from front to back, might sound distorted or unnatural. Since these systems only use two speakers, the sound field typically lacks the fullness and impact that a true multi-speaker setup would offer.
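
The sweet spot follows from the timing cues described earlier. The sketch below assumes two speakers one meter either side of the screen and a listener 2.5 meters back, then measures how far apart the two speakers' sounds arrive as the listener slides sideways. The layout and names are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
LEFT_SPEAKER = (-1.0, 0.0)   # assumed positions in meters
RIGHT_SPEAKER = (1.0, 0.0)


def arrival_offset_s(listener_x: float, listener_y: float = 2.5) -> float:
    """Seconds by which the left speaker's sound lags the right speaker's.

    Zero means both arrive together; positive means the left speaker's
    sound arrives later.
    """
    d_left = math.hypot(listener_x - LEFT_SPEAKER[0], listener_y - LEFT_SPEAKER[1])
    d_right = math.hypot(listener_x - RIGHT_SPEAKER[0], listener_y - RIGHT_SPEAKER[1])
    return (d_left - d_right) / SPEED_OF_SOUND


if __name__ == "__main__":
    for x in (0.0, 0.25, 0.5, 1.0):
        offset_ms = arrival_offset_s(x) * 1000
        print(f"{x:.2f} m off center: left speaker lags by {offset_ms:.2f} ms")
```

On the center line both speakers' sounds arrive together, so the carefully timed cues survive. Half a meter to one side, the offset is already on the order of a millisecond, which is large compared with the timing differences the processing relies on.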

Here are a few factors to consider when purchasing a virtual surround-sound system:

- Room size and shape: Digital sound projectors, which rely on sound reflections, may not perform well in very large rooms or in oddly shaped rooms with unconventional walls.

- Desired effect: If you’re aiming for powerful, immersive sound throughout the room, a two-speaker system might not meet your needs.

- Subwoofer: 2.1 systems include a subwoofer, but many digital sound projectors don't. You can buy a separate subwoofer if you want deeper bass.

- Setup requirements: Some virtual surround-sound systems are plug-and-play, while others may require calibration, such as measuring the room size.

- Price: While some 2.1 systems are an affordable alternative to 5.1 or 7.1 systems, high-end digital sound-projection setups can cost upwards of $1,500.